For Amusement Purposes Only

The High Corvid of Progressivity

Chance favors the prepared mind.

~ Louis Pasteur

- 30 Posts

- 17 Comments

7·1 month ago

There’s also an astroturfing campaign against it over there - I’ve noticed a lot of bot comments and bullshit when I post links to here from there.

1·1 month ago

Haven’t done it myself yet, but here’s the Docker install guide… seeing what your username is and all…

Lotta smarter people than me have already posted better answers in this thread, but this really stood out to me:

> the thing is. my queries are not that complex. they simply go through the whole table to identify any duplicates which are not further processed then, because the processing takes time (which we thought would be the bottleneck). but the time savings to not process duplicates seems now probably less than that it takes to compare batches with the SQL table

Why aren’t you de-duping the table before processing? What’s inserting these duplicates and why are they necessary to the table? If they serve no purpose, find out what’s generating them and stop it, or write a pre-load script to clean it up before your core processing queries access that table. I’d start here - it sounds like what’s really happening is that you’ve got a garbage query dumping dupes into your table and bloating your db.
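Here's a minimal sketch of that kind of pre-load cleanup, using Python's standard-library `sqlite3` module. The table `records`, its `record_key`/`payload` columns, and the rule that rows sharing a `record_key` count as duplicates are all hypothetical; your schema, database engine, and definition of "duplicate" will differ. It keeps the first-inserted copy of each key, then adds a unique index so later batches can be loaded with `INSERT OR IGNORE` instead of a separate comparison query.

```python
import sqlite3
from typing import Iterable, Tuple


def dedupe_records(conn: sqlite3.Connection) -> int:
    """Pre-load cleanup on the hypothetical `records` table: delete duplicate
    rows, keeping the first-inserted copy (lowest rowid) of each record_key."""
    cur = conn.execute(
        """
        DELETE FROM records
        WHERE rowid NOT IN (SELECT MIN(rowid) FROM records GROUP BY record_key)
        """
    )
    # Once the table is clean, a unique index makes the database reject future dupes.
    conn.execute(
        "CREATE UNIQUE INDEX IF NOT EXISTS ux_records_key ON records(record_key)"
    )
    conn.commit()
    return cur.rowcount  # number of duplicate rows deleted


def load_batch(conn: sqlite3.Connection, rows: Iterable[Tuple[str, str]]) -> None:
    """Insert a new batch; rows whose record_key already exists are skipped by
    the unique index, so no whole-table comparison query is needed."""
    conn.executemany(
        "INSERT OR IGNORE INTO records (record_key, payload) VALUES (?, ?)", rows
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE records (record_key TEXT, payload TEXT)")
    conn.executemany(
        "INSERT INTO records VALUES (?, ?)",
        [("a", "1"), ("b", "2"), ("a", "1"), ("c", "3"), ("b", "2")],
    )
    removed = dedupe_records(conn)
    load_batch(conn, [("a", "9"), ("d", "4")])
    print(removed, conn.execute("SELECT COUNT(*) FROM records").fetchone()[0])
```

The unique index is what targets the bottleneck described in the quote: each incoming row costs one index lookup instead of a scan of the whole table. Other engines spell the "skip if it already exists" insert differently, so treat this as an illustration of the approach rather than drop-in code.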

6·1 month ago

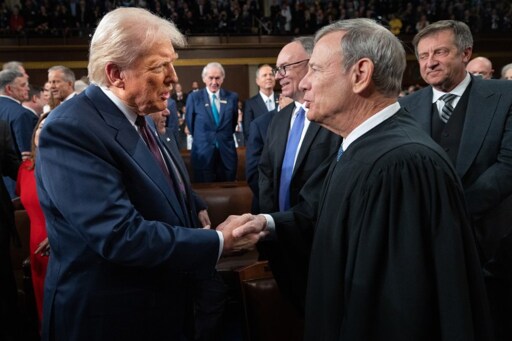

Trump.

Edit: QuarterSwede beat me to it, so I’ll go for Musk as a close second.

0·1 month ago

It’s time for a nationwide movement to dox ICE agents.

This is almost exactly what happened to me on Monday, resulting in a fifteen-hour day.

My particular Jenga piece was an Access query that none of my predecessors had deigned to document or even tell me about… but it was critical to run monthly, or you ended up with obsolete data embedded deep within multi-million dollar reports.

Thank god I don’t work on salary anymore, or I’d be really upset.

cackles manically in DAX

It depends on how long you use it:

Year 1: Ok, this is kinda cool, but why does it keep fucking breaking?

Year 2: How is it still fucking breaking?

Year 3: I just don’t fucking care why it keeps breaking. I think I hate this program.

Year 4: I hate this program

Year 5: Let the hate flow through you, consume you. Feel the dark side flowing through your fingertips. Yes. Good. Why is it breaking? It’s the end users. Yes… they’ve been plotting against you from the beginning - hiding columns, erasing formulas and even…

merging cells

Cue heavy breathing through a respirator.

Year 6: It’s a board meeting. They ask you if you can average all the moving averages of average sales per month and provide an exponential trendline to forecast growth on five million rows of data.

You say “sure, boss, I can knock that out for you in Excel in about an hour or two.”

Your team leader interjects “I believe what he was trying to say was we’ll use Tableau and it will take about a month.”

You turn to him with a steely glare.

“I find your lack of faith disturbing.”

Year 7: Your team leader is gone after you pointed out he fucked up one of your sheets that run the business by merging a cell. All data flows through you and the holy spreadsheet, and the board is terrified of firing you because no one but you knows how your sheets work, and their entire inventory system would collapse if you left.

But then the inevitable happens. Dissension in the ranks. The juniors talk of Python, R, Tableau, Power BI - anything to release your dark hold upon the holy data. You could crush them all with an XLOOKUP chain faster than they can type a SELECT statement. The Rebellion is coming, but you’re ready. You’ve discovered the Data Model, capable of building a relational database behind the hidden moons of Power Pivot, parsing tens of millions of rows - and your Death Star is almost complete.

You’re ready to unleash your dark fury when the fucking spreadsheet breaks again.

Year 8: New company. They ask if you know Excel. You just start cackling with an addict’s gleam in your eye as tears start streaming down your face.

They hire you on the spot.

All they use is Excel. And Access.

You think, ok, this is kinda cool, but why does it keep fucking breaking?

Post-Traumatic Shit Disorder, to be precise.

0·2 months ago

Ya know, Elon…

9·2 months ago

Agreed (context: same legacy system work, 20 years), although given the size and scale of the tech debt involved, I’d peg it at 5 years if you had a team of 100+ COBOL developers.

10 to do it right.

Once you start dealing with databases older than SQL and languages older than C, things get funky real fast.

0·3 months ago

So, who’s gonna tell Lutnick that voter turnout for those age 65 and above is 75%+?

And it’s not like old folks like to complain about things just for the fuck of it, right?

Lutnick and Trump are going to discover why they call Social Security the third rail of American politics.

2·3 months ago

You’re correct - a standard worksheet tab tops out at 1,048,576 rows (roughly 1 million).

The way to get around that limitation is to use the Data Model within Power Pivot:

It can accept all of the data connections a standard Power Query can (ODBC, SharePoint, Access, etc.).

You build the connection in Power Pivot to your big tables and it will pull in a preview. If needed, you can build relationships between tables with the Relationship Manager. You can also use DAX to build formulas much like you would in a regular Excel tab (the syntax is much closer to Excel formulas than to Visual Basic). You can then run Pivot Tables and charts against the Data Model to pull out the subsets of data you want to look at.
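To make the relationship-plus-pivot idea concrete outside Excel, here's a rough analogy in Python using the standard-library `sqlite3` module: a large fact table related to a small lookup table on a key, with a measure summed per category. That is roughly what a Pivot Table over the Data Model does when you put a field from a related table on its rows. The table and column names are made up for illustration, and this is not how Power Pivot works internally (it uses its own in-memory columnar engine). In DAX, the measure itself would be something along the lines of `Total Sales := SUM(Sales[Amount])`.

```python
import sqlite3

# Hypothetical star schema: a big fact table (sales) related to a small lookup
# table (products) on product_id, like a relationship defined in the Data Model.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE products (product_id INTEGER PRIMARY KEY, category TEXT);
    CREATE TABLE sales (
        product_id INTEGER REFERENCES products(product_id),
        month TEXT,
        amount REAL
    );
    INSERT INTO products VALUES (1, 'Widgets'), (2, 'Gadgets');
    INSERT INTO sales VALUES
        (1, '2024-01', 100.0), (1, '2024-02', 150.0),
        (2, '2024-01', 80.0),  (2, '2024-01', 20.0);
    """
)


def pivot_sum_by_category(conn: sqlite3.Connection) -> dict:
    """Follow the sales -> products relationship and sum the amount per
    category, analogous to a SUM measure sliced by a lookup-table field."""
    rows = conn.execute(
        """
        SELECT p.category, SUM(s.amount)
        FROM sales AS s
        JOIN products AS p ON p.product_id = s.product_id
        GROUP BY p.category
        ORDER BY p.category
        """
    ).fetchall()
    return dict(rows)


print(pivot_sum_by_category(conn))  # {'Gadgets': 100.0, 'Widgets': 250.0}
```

Keeping the category on a separate lookup table, rather than repeating it on every sales row, is the same design choice that keeps a Data Model with millions of rows manageable.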

The load times are pretty decent - usually it takes 2-3 minutes to pull a table of 4 million rows from an SQL database over ODBC, but your results may vary depending on the data source. It can get memory-intensive, so I recommend a machine with a decent amount of RAM if you’re going to build anything for professional use.
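As a rough yardstick for comparing your own data source, the 2-3 minute figure for 4 million rows works out to:

$$
\frac{4\times10^{6}\ \text{rows}}{180\ \text{s}} \approx 2.2\times10^{4}\ \text{rows/s}
\quad\text{to}\quad
\frac{4\times10^{6}\ \text{rows}}{120\ \text{s}} \approx 3.3\times10^{4}\ \text{rows/s}
$$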

The nice thing about building it out this way (as opposed to using independent Power Queries to bring out your data subsets) is that it’s a one-button refresh, with most of the logic and formulas hidden back within the Data Model, so it’s a nice way to build reports for end-users that’s harder for them to fuck up by deleting a formula or hiding a column.

2·3 months ago

Seriously - I can parse multiple tables of 5+ million rows each… in EXCEL… on a 10 year old desktop and not have the fan even speed up. Even the legacy Access database I work with handles multiple million+ row tables better than that.

Sounds like the kid was running his AI hamsters too hard and they died of exhaustion.

O my sweet summer child… dictatorships don’t have expiration dates. This will not end with an election. It will end with a revolution.