Last July, San Jose issued an open invitation to technology companies to mount cameras on a municipal vehicle that began periodically driving through the city’s district 10 in December, collecting footage of the streets and public spaces. The images are fed into computer vision software and used to train the companies’ algorithms to detect the unwanted objects, according to interviews and documents the Guardian obtained through public records requests.

Holy mackerel! Could this be any more of an extremely boring, dumb, and awful cyberpunk dystopia? Good God!

Maybe it’s to help them.

Don’t tell me, I like the illusion.

Judging from the screen grabs: since when is a legally street-parked RV a homeless encampment? Looks like picking low-hanging fruit for campaign talking points.

This sounds like a real opportunity for false positives as opposed to, I dunno, engaging with the community?

Quite ironically in this context, San Jose is named after St. Joseph (he of legal-dad-of-Jesus fame), who was once famously told there was no room at the inn and had to make do with a stable.

Sounds about right for American Christianity.

Only if you’re charging a luxury room price for the stable.

And help them, right? RIGHT?

Yes and no. San Jose has many, many programs to assist the homeless, but some homeless people are still dying in the creeks when they flood. We also have relatively new initiatives for reporting encampments to outreach groups instead of the police.

Not everywhere is a safe place for someone to settle. It’s one thing to have a person spend the night somewhere, but services like these may help identify encampments forming in flood-prone or otherwise risky areas before they become too entrenched.

If only we didn’t live in a dystopia and that was what this was for.

One can only dream, I guess.

San Jose’s homeless population is a very mixed bag: some want to stay homeless permanently, some recently lost their homes and can still be helped, and some are on the streets because of drugs. (A friend told me about a homeless man who asked for a burger but refused one from the nearest burger joint, implying he wanted money for drugs.)

Figuring out who’s helpable isn’t an easy task, because not all homeless people got to that lifestyle for the same reason.

This is part of the problem with using terms like “homeless” to describe the occupants of an illegal campsite. There are numerous reasons one may choose to camp in a public space.

- Some are truly struggling to regain their financial footing and either the assistance programs are not helpful or they are unable to utilize them.

- Some are sick which causes them to be unable to participate functionally in society, and they have “fallen through the cracks” of services designed to support them.

- Some reject housing in favor of a lifestyle that demands less effort or accountability, possibly in service of addiction, which ties into the second point above.

All members of society should have access to shelter (or a safe campsite, if that is our preference) and have our basic needs met. As members of society, we must also follow laws that, for very good reasons, prohibit us from simply erecting a camping tent in a city park.

The problem with ignoring campsites is plummeting hygiene and safety. Waste is generated by day-to-day life and must be collected or eliminated. As campers accumulate and abandon the implements of a semi-permanent hovel (furniture, bedding, tarps, etc.), the surrounding area turns into a dumping site.

The technology described in the article already identifies potholes and illegal parking. It does not identify people or their race. Surely it could evolve into something with more potential for abuse, but in its current capacity, it is quite a neutral tool.

We have collected a lot of data on the “ignore and do nothing” solution – the outcome is a scientific certainty. Using tools like this to measure progress (for better or worse) seems like something that would help generate support for other solutions, such as extensive expansion of low-cost/no-cost housing services.

the accuracy for lived-in cars is still far lower: between 10 and 15%

Sounds like the tech isn’t terribly useful

That surely won’t stop governments from throwing millions at it or private companies from taking the money.

It doesn’t have to be accurate to be useful

Case in point: https://en.wikipedia.org/wiki/ADE_651

Basically a dowsing rod, totally incapable of detecting bombs, drugs, etc. But possibly still useful as a probable cause generator.

That was probably one of the more depressing Wiki articles I’ve read in a minute.

I wonder if it could spot Steve

I’m so grateful he’s Canadian

This is the best summary I could come up with:

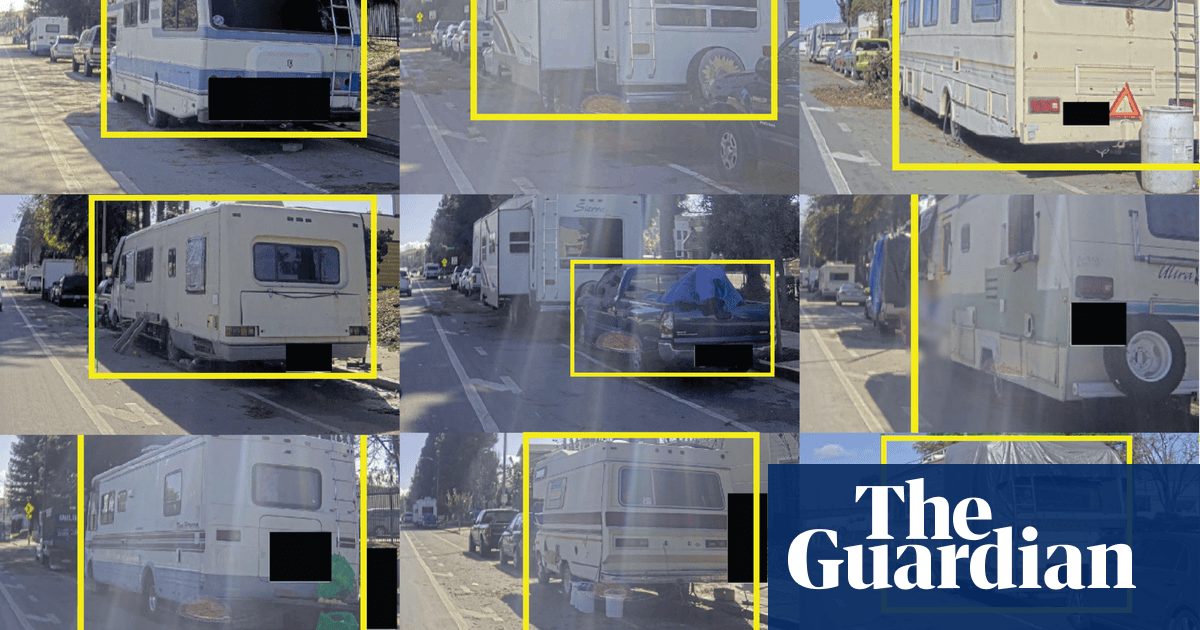

For the last several months, a city at the heart of Silicon Valley has been training artificial intelligence to recognize tents and cars with people living inside in what experts believe is the first experiment of its kind in the United States.

Last July, San Jose issued an open invitation to technology companies to mount cameras on a municipal vehicle that began periodically driving through the city’s district 10 in December, collecting footage of the streets and public spaces.

There’s no set end date for the pilot phase of the project, Tawfik said in an interview, and as the models improve he believes the target objects could expand to include lost cats and dogs, parking violations and overgrown trees.

City documents state that, in addition to accuracy, one of the main metrics the AI systems will be assessed on is their ability to preserve the privacy of people captured on camera – for example, by blurring faces and license plates.

The group, made up of dozens of current and formerly unhoused people, has recently been fighting a policy proposed last August by the San Jose mayor, Matt Mahan, that would allow police to tow and impound lived-in vehicles near schools.

In addition to providing a training ground for new algorithms, San Jose’s position as a national leader on government procurement of technology means that its experiment with surveilling encampments could influence whether and how other cities adopt similar detection systems.

The original article contains 1,487 words, the summary contains 240 words. Saved 84%. I’m a bot and I’m open source!

good bot

Hey look, it’s the Bay Area. Can it burn down again, please? Sincerely, some redneck from the IE

Right, why can’t it be a wonderful place to live like Riverside or San Bernardino?

Yeah, we’re shitholes, but we’re damned well aware of it. Only the rich fuckers think otherwise.

It’d be a shame if a .22 round found its way through the casing of that camera. A real damn shame, for sure.

More like the camera will be triggering a minigun to clear out the area with great precision.

AI policing has begun.

They’ve already been using it to establish probable cause, and as evidence that all Black people are the same and therefore guilty. I’m referring to facial recognition.

In terms of legal precedent this may be a good thing in the long run.

The software billed as “AI” these days is half-baked. If one or more law enforcement agencies point to the city’s new software as their probable cause for an arrest, it won’t take long for that to be challenged in court.

This sets the stage for the software’s legality to be challenged now, while it is still half-baked, and for a legal standard to be set that demands high accuracy and/or human assessment before an arrest is made.

I think you’re more optimistic than I am about a conservative appeals court judge being able to first understand that the technology works very well, then actually give a shit if they do.

I’m arguing against the technology. I believe that the decision to make an arrest should fall to a human being and that individual should be allowed to override a bad call by the shit being billed as AI.

There’s a real possibility that law enforcement agencies may try to foist responsibility for decisions onto software and require officers to abide by the recommendations of said software. That would be a huge mistake.

This kind of software is already illegal in the EU. AI cannot be used for surveillance or to make decisions about people or arrest them.

Good for the EU I guess?

I was talking about it in the US, where the article is focused.

Yes, but the EU is setting a legal precedent here that American legislation should follow.

Oh boy, I have some news for you …

Defeatism only helps the thugs who benefit from chaos.

Disgraceful. If I ever meet somebody involved in this…

They’ll probably start out by identifying the various “races”. I’m a brown person and would like to keep reminding everyone that different races do not exist, in the sense that “race” is not a scientific term with any meaning. A term with proper meaning is “species”, and there is only one: Homo sapiens… It’s not just Juantastic who lives under the bridge, it’s all of us. We are all a single family. Anyway, would you let your brother or sister or parents or relatives go hungry and live under a bridge? Nah, right? What if they were thousands of miles away and didn’t have a place to sleep? Still nah! You would do whatever you could to help! So why are there homeless people in every city, and why don’t we help the people of Gaza and Ukraine? Right? We need to do a better job!

Every year California is becoming more like Night City. Cyberpunk is supposed to be a dystopia, not an aspiration.

This might actually get struck down on constitutionality. How does one confront their accuser in court if the accuser is a trained neural net?

And that’s without even touching on the fact that ML is stochastic in nature, and should absolutely not be considered accurate enough to be an unsupervised and unmoderated single-point-of-failure decision engine in contexts like legal, medical, or other critical decision-making processes. The fact that ML regularly and demonstrably hallucinates (or otherwise yields garbage output) is just not acceptable in a regulatory sense.

Source: software engineer in biotech; we are specifically disallowed from using ML at any level in our work for the above reasons, as well as potential HIPAA-related data mining issues.

I don’t know much about jurisprudence, but wouldn’t the neural net be a tool of the person who brought the lawsuit?

Like if you get brought in due to DNA, you don’t have to face the centrifuge that helped extract your DNA from the sample?

You’re ignoring the fact that using such a failure-prone system to initiate legal proceedings against a citizen is ABSOLUTELY going to overload an already overloaded system. And that’s not even going into the fact that it puts an unjust burden on those falsely accused, or the fact that it’s targeting a segment of the population that’s a lot more likely to go “fuck it, I don’t care, how could things possibly get worse” (read: serious depression, PTSD, other neurodivergences that often correlate with being unhoused). This is by design.

This is an all-around grade-A shit policy. It’s also a policy designed to treat the symptom instead of the cause. It will make the streets around San Jose look a bit nicer, and in doing so it will harm a lot of people.

I don’t think the idea is to bring criminal proceedings against people. Not sure what they do in San Jose, but in the cities I’ve lived in, homeless people are essentially immune to fines or criminal charges because police know they can’t or won’t pay anything. So they go and force them to move and throw away their belongings if they can’t or don’t take them in time, but they don’t arrest or ticket these people.

I think it’s a stupid policy, but I don’t see how any of this is applicable. If the AI identifies an encampment, it’s going to be the police who come and scare people off. This isn’t like a red-light camera that mails you a ticket: there’s no address to send a ticket to, and the AI isn’t going to be able to identify the individuals occupying a tent.

I mean I’m not ignoring those facts. I prefaced by saying I don’t know much about jurisprudence.

Thanks for providing some insight though.

For what it’s worth, I didn’t intend to come off stabby or dismissive

No problem.

Appreciate you clarifying. Have a nice day random person.