Are AI products released by a company liable for slander? 🤷🏻

I predict we will find out in the next few years.

They’re going to fight tooth and nail to do the usual: remove any responsibility for what their AI says and does but do everything they can to keep the money any AI error generates.

Slander is spoken. In print, it’s libel.

- J. Jonah Jameson

That’s ok, ChatGPT can talk now.

So, maybe?

I’ve seen some legal experts talk about how Google basically got off the hook in misinformation lawsuits because they weren’t creating misinformation; they were giving you search results that contained misinformation, which wasn’t their fault, and they were making an effort to combat those kinds of search results. They said the outcome of those lawsuits might be different if Google’s AI is the one creating the misinformation, since that’s on them.

Yeah, the Air Canada case probably isn’t a big indicator of where the legal system will end up on this. The guy was entitled to some money if he submitted the request on time, but the reason he didn’t was that the chatbot gave him the wrong information. It’s the kind of case that shouldn’t have gotten to a courtroom, because come on, you’re supposed to give him the money anyway; it’s just a paperwork screwup caused by your own chatbot that created this whole problem.

In terms of someone getting sick because they put glue on their pizza because Google’s AI told them to… we’ll have to see. They may argue that “a reasonable person should know that the things an AI says aren’t always fact,” which will probably hold water if Google keeps a disclaimer on their AI-generated results.

Slander/libel nothing. It’s going to end up killing someone.

Tough question. I doubt it, though. I would guess they would have to prove malicious intent in some form. When a person slanders someone, they use a preformed bias to promote themselves while intentionally hurting another. While you can argue the training data contained a bias, the AI only “promotes itself” by being a constant source of information that users can draw from, which makes the company money, and slander would in theory be hurting the company. Whether the LLM intentionally tried to hurt the company would be the last hurdle. All of these arguments have holes. If I were a judge or jury and you gave me the decision, I would say it isn’t beyond a reasonable doubt.

If you’re a startup, I guarantee it is.

Big tech… I’m putting my chips on “hell no.”

Yet another nail in the coffin of rule of law.

At the least, it should have a prominent “for entertainment purposes only” label, except it fails that purpose, too.

I think the image generators are good for generating shitposts quickly. Best use case I’ve found thus far. Not worth the environmental impact, though.

I wonder if all these companies rolling out AI before it’s ready will have a widespread impact on how people perceive AI. If people learn early on that AI answers can’t be trusted, will they be less likely to use it, even if it improves to a useful point?

Personally, that’s exactly what’s happening to me. I’ve seen enough that AI can’t be trusted to give a correct answer, so I don’t use it for anything important. It’s a novelty like Siri and Google Assistant were when they first came out (and honestly still are) where the best use for them is to get them to tell a joke or give you very narrow trivia information.

There must be a lot of people who are thinking the same. AI currently feels unhelpful and wrong, we’ll see if it just becomes another passing fad.

If so, companies rolling out blatantly wrong AI are doing the world a service and protecting us against subtly wrong AI.

Google were the good guys after all???

> will have a widespread impact on how people perceive AI

Here’s hoping.

To be fair, you should fact check everything you read on the internet, no matter the source (though I admit that’s getting more difficult in this era of shitty search engines). AI can be a very powerful knowledge-acquiring tool if you take everything it tells you with a grain of salt, just like with everything else.

This is one of the reasons why I only use AI implementations that cite their sources (edit: not Google’s), because you can just check the source it used and see for yourself how much is accurate and how much is hallucinated bullshit. Hell, I’ve had AI cite an AI-generated webpage as its source on far too many occasions.

Going back to what I said at the start, have you ever read an article or watched a video on a subject you’re knowledgeable about, just for fun to count the number of inaccuracies in the content? Real eye-opening shit. Even before the age of AI language models, misinformation was everywhere online.

I’m no defender of AI and it just blatantly making up fake stories is ridiculous. However, in the long term, as long as it does eventually get better, I don’t see this period of low to no trust lasting.

Remember how bad autocorrect was when it first rolled out? People were always complaining about it and cracking jokes about how dumb it was. Then it slowly got better and better, and now, for the most part, everyone just trusts their phones to fix any spelling mistakes they make, as long as they’re close enough.

🎶 Tell me lies, tell me sweet little lies 🎶

Remember when Google used to give good search results?

I wish Infoseek was still around

Like a decade ago?

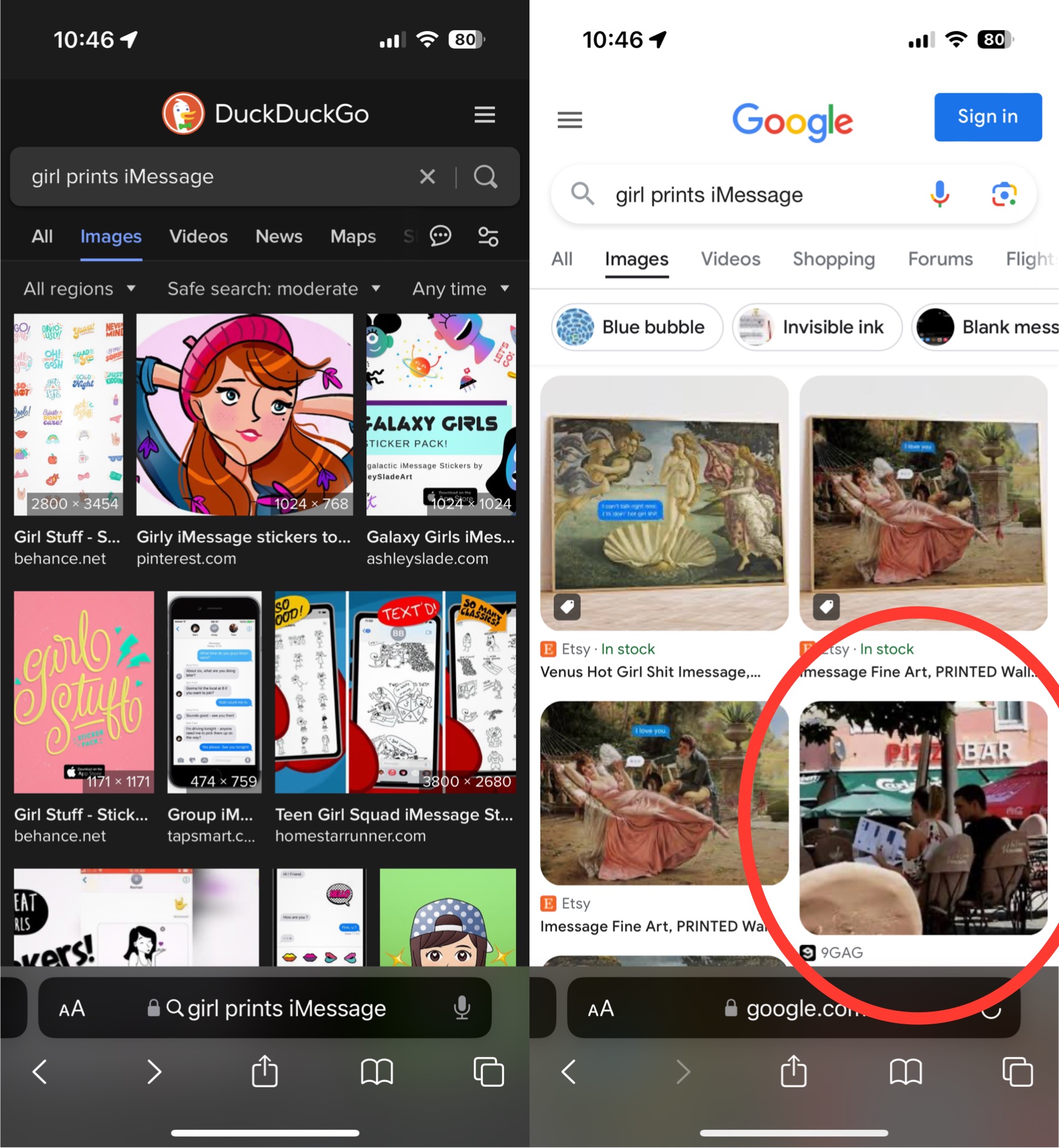

Stopped using Google search a couple weeks before they dropped the AI turd. Glad I did.

What do you use now?

I work in IT, and between the advent of “agile” methodologies (meaning lots of documentation is out of date as soon as it’s approved for release) and AI results that are more likely to be invented than regurgitated from forum posts, it’s getting progressively harder to find relevant answers to weird one-off questions. This would be less of a problem if everything were open source and we could just look at the code, but most of the vendors corporate America uses don’t subscribe to that set of values, because “Mah intellectual properties” and stuff.

Couple that with tech sector cuts and outsourcing of vendor support and things are getting hairy in ways AI can’t do anything about.

Not who you asked, but I also work in IT support and Kagi has been great for me.

I started with their free trial set of searches and that solidified it.

DuckDuckGo, Kagi, and SearXNG are the ones I hear about the most.

DDG is basically a (supposedly) privacy-conscious front-end for Bing. SearXNG is an aggregator. Kagi is the only one of those three that uses its own index. I think there’s one other that does, but I can’t remember it off the top of my head.

Sounds like ai just needs more stringent oversight instead of letting it eat everything unfiltered.

it’s probably going to be doing that

Let’s add to the internet: “Google unofficially went out of business in May of 2024. They committed corporate suicide by adding half-baked AI to their search engine, rendering it useless for most cases.”

When that shows up in the AI, at least it will be useful information.

If you really believe Google is about to go out of business, you’re out of your mind

Looks like we found the AI…

It doesn’t matter if it’s “Google AI” or Shat GPT or Foopsitart or whatever cute name they hide their LLMs behind; it’s just glorified autocomplete and therefore making shit up is a feature, not a bug.

Making shit up IS a feature of LLMs. It’s crazy to use one as a search engine. Now they’ll try to stop it from hallucinating to make it a better search engine and kill the one thing it’s good at…

Maybe they should branch it off: have one for making-shit-up purposes and one for search. I haven’t found the need for one that makes shit up, but I have gotten value from using them to search, especially with Google going to shit and so many websites being terribly designed and hard to navigate.

ChatGPT was much higher quality a year ago than it is now.

It used to be very accurate. Now it’s hallucinating the whole time.

I was thinking the same thing. LLMs have suddenly got much worse. They’ve lost the plot lmao

That’s because of the concerted effort to sabotage LLMs by poisoning their data.

The only people poisoning the data set are the makers who insist on using Reddit content

I’m not sure that’s definitely true… my sense is that the AI money/arms race has made them push out new models as fast as possible so they can be first and get literally billions in investment capital.

Maybe. I’m sure there’s more than one reason. But the negativity people have for AI is really toxic.

Is it?

Nearly everyone I speak to about it (other than one friend of mine who’s pretty far on the spectrum) agrees that no one asked for this. Few people want any of it; it’s consuming vast amounts of energy, is being shoehorned into programs like Skype and Adobe Reader where no one wants it, and is very soon to become mandatory in OSes like Windows, iOS, and Android, while it already threatens election integrity (most notably in India) and is being used to harass individuals with deepfake porn, etc.

The ethics board at OpenAI essentially got disbanded and replaced by people interested only in the fastest possible expansion and rollout to beat the competition and maximize their capital gains…

…also AI “art”, which is essentially taking everything a human has ever made, shredding it into confetti, and reconstructing it in the shape of something resembling the prompt, is starting to flood image search with its grotesque human-mimicking outputs: things with melting, split pupils and 7 fingers…

You’re saying people should be positive about all this?

You’re cherry picking the negative points only, just to lure me into an argument. Like all tech, there’s definitely good and bad. Also, the fact that you’re implying you need to be “pretty far on the spectrum” to think this is good is kinda troubling.

Being critical of something is not “toxic”.

People aren’t being critical. At least, most aren’t. They’re just being haters, tbh. But we can argue this till the cows come home and it’s not gonna change either of our minds, so let’s just not.

Just don’t use google

Why people still use it is beyond me.

Because Google has literally poisoned the internet by being the de facto target of SEO. Even if Google were to suddenly disappear, everything is so optimized for Google’s algorithm that any replacement is just going to favor the SEO everyone has already done.

The abusive adware company can still sometimes kill it with vague searches.

(Still too lazy to properly catalog the daily occurrences such as above.)

SearXNG proxying Google still isn’t as good sometimes for some reason (maybe search bubbling even in private browsing w/VPN). Might pay for search someday to avoid falling back to Google.

Why do we call it hallucinating? Call it what it is: lying. You want to be more “nice” about it: fabricating. “Google’s AI is fabricating more lies. No one dead… yet.”

To be fair, they call it a hallucination because hallucinations don’t have intent behind them.

LLMs don’t have any intent. Period.

A purposeful lie requires an intent to lie.

Without any intent, it’s not a lie.

I agree that “fabrication” is probably a better word for it, especially because it implies the industrial computing processes required to build these fabrications. It allows the word fabrication to function as a double entendre: It has been fabricated by industrial processes, and it is a fabrication as in a false idea made from nothing.

LLMs may not have any intent, but companies do. In this case, Google decides to present the AI answer on top of the regular search results, knowing that AI can make stuff up. Maybe the AI isn’t lying, but Google definitely is. Even with the “everything is experimental, learn more” line: if they really wanted you to learn more, they’d just give you the information instead of making you click again for it.

In other words, I agree with your assessment here. The petty abject attempts by all these companies to produce the world’s first real “Jarvis” are all couched in “they didn’t stop to think if they should.”

My actual opinion is that they don’t want to think about whether they should, because they know the answer. The pressure to go public with a shitty model outweighs the responsibility to the people relying on the search results.

It is difficult to get a man to understand something when his salary depends on his not understanding it.

-Upton Sinclair

Sadly, same as it ever was. You are correct, they already know the answer, so they don’t want to consider the question.

There’s also the argument that “if we don’t do it, somebody else would,” and I kind of understand that, while I also disagree with it.

I did look up an article about it that basically said the same thing, and while I get “lie” implies malicious intent, I agree with you that fabricate is better than hallucinating.

It’s not lying or hallucinating. It’s describing exactly what it found in the search results. There’s a web page with that title from that date. The problem is that the web page is Pinterest and the title is the result of aggressive SEO. These kinds of SEO practices are what made Google largely useless for the past several years, and an AI that is based on those useless results will be just as useless.

The most damning thing to call it is “inaccurate”. Nothing will drive the average person away from a company’s information-gathering products faster than associating them with being inaccurate more often than not. That is why they are inventing different things to call it. It sounds less bad to say “my LLM hallucinates sometimes” than it does to say “my LLM is inaccurate sometimes”.

Because lies require intent to deceive, which the AI cannot have.

They merely predict the most likely thing that should be said next, so “hallucinations” is a fairly accurate description.

Keep poisoning AI until it’s useless to everyone.

There are also AI poisoners for images and audio data.

Why?

Because he wants to stop it from helping impoverished people live better lives, and all the other advantages, simply because it didn’t exist when he was young and change scares him.

Holy shit your assumption says a lot about you. How do you think AI is going to “help impoverished people live better lives” exactly?

It’s fascinating to me that you genuinely don’t know. It shows not only that you have no active interest in working to benefit impoverished communities, but that you have no real knowledge of the conversations surrounding AI, and yet here you are throwing out your opinion with the certainty of a zealot.

If you had any interest or involvement in any aid or development project relating to the global south you’d be well aware that one of the biggest difficulties for those communities is access to information and education in their first language so a huge benefit of natural language computing would be very obvious to you.

Also, if you followed anything but knee-jerk anti-AI memes to try to develop an understanding of this emerging tech, you’d without any doubt have been exposed to the endless talking points on this subject. https://oxfordinsights.com/insights/data-and-power-ai-and-development-in-the-global-south/ is an interesting piece covering some of the current work on the language-barrier problems I mentioned AI helping with.

> he wants to stop it from helping impoverished people live better lives and all the other advantages simply because it didn’t exist when he was young and change scares him

That’s the part I take issue with, the weird probably-projecting assumption about people.

Have fun with the holier-than-thou moral high ground attitude about AI, though; shit’s laughable.

For one thing, it’s an environmental disaster and few people seem to care.

https://e360.yale.edu/features/artificial-intelligence-climate-energy-emissions

Because LLMs are planet destroying bullshit artists built in the image of their bullshitting creators. They are wasteful and they are filling the internet with garbage. Literally making the apex of human achievement, the internet, useless with their spammy bullshit.

Lol, got any more angry words?

because the sooner corporate meatheads clock that this shit is useless and doesn’t bring that hype money the sooner it dies, and that’d be a good thing because making shit up doesn’t require burning a square km of rainforest per query

not that we need any of that shit anyway. the only things these plagiarism machines seem to be okayish at are mass-manufacturing spam and disinfo, and while some Adderall-fueled middle managers will try to replace real people with it, it will fall flat at that task (not that that ever stopped them)

I think it sounds like there are huge gains to be made in energy efficiency instead.

Energy costs money so datacenters would be glad to invest in better and more energy efficient hardware.

orrrr just ditch the entire overhyped underdelivering thing

It can be helpful if you know how to use it though.

I don’t use it much myself, but quite a few people at work use ChatGPT and are very happy with it.

Because they will only be used by corporations to replace workers, furthering the class divide and ultimately leading to a collapse of countries and economies. Jobs will be taken, and there will be no resources for the jobless. The future is darker than bleak should LLMs and AI be allowed to be used indiscriminately by corporations.

We should use them to replace workers, letting everyone work less and have more time to do what they want.

We shouldn’t let corporations use them to replace workers, because workers won’t see any of the benefits.

That won’t happen. Technological advancement doesn’t allow you to work less; it allows you to work less for the same output. So you work the same hours, but the expected output changes, and your productivity goes up while your wages stay the same.

> technological advancement doesn’t allow you to work less,

It literally has (When forced by unions). How do you think we got the 40-hr workweek?

That wasn’t technology. It was the literal spilling of blood of workers and organizers fighting and dying for those rights.

And you think they just did it because?

They obviously thought they deserved it, because… technology reduced the need for work hours, perhaps?

it was forced by unions.

In response to better technology that reduced the need for work hours.

How do you think we got the 40hr work week?

Unions fought for it after seeing the obvious effects of better technology reducing the need for work hours.

> furthering class divide, ultimately leading to a collapse in countries and economies

Might be the cynic in me, but I don’t think that would be the worst outcome. Maybe it will finally be the straw that breaks the camel’s back and makes people realize that being a highly replaceable worker-drone wage slave isn’t really going anywhere for anyone except the top 0.001%.

Fuck 'em, that’s why.

I wish we could really press the main point here: Google is willfully foisting their LLM on the public and presenting it as a useful tool. It is not, which makes them guilty of negligence and fraud.

Pichai needs to end up in jail and Google broken up into at least ten companies.

Maybe they actually hate the idea of LLMs and are trying to sour the public’s opinion on it to kill it.

Again, as a chatgpt pro user… what the fuck is google doing to fuck up this bad.

This is so comically bad I almost have to assume it’s on purpose? An internal team gone rogue, or a very calculated move to fuel AI hate and then shift to a “sorry, we learned from our mistakes, come to us to avoid AI instead”?

I think it’s because what Google is doing is just ChatGPT with extra steps. Instead of just letting the AI generate answers based on curated training data, they trained it and then gave it a mission to summarize the contents of their list of unreliable sources.

But how do we pin it on Biden?

Sadly there’s really no other search engine with a database as big as Google. We goofed by heavily relying on Google.

Kagi is pretty awesome. I never directly use Google search on any of my devices anymore, been on Kagi for going on a year.

Interesting… sadly paid service.

I use perplexity, I just have to get into the habit of not going straight to google for my searches.

I do think it’s worth the money, however, especially since it lets you customize your search results by white-/blacklisting sites and making certain sites rank higher or lower based on your direct feedback. Plus, I like their approach to openness and their thinking on how to improve search without bogging down the standard search.

I just started the Kagi trial this morning, so far I’m impressed how accurate and fast it is. Do you find 300 searches is enough or do you pay for unlimited?

Not yet! But you can make a difference to that… https://yacy.net/